Blockchain and Decentralized Systems: A Transparent Future

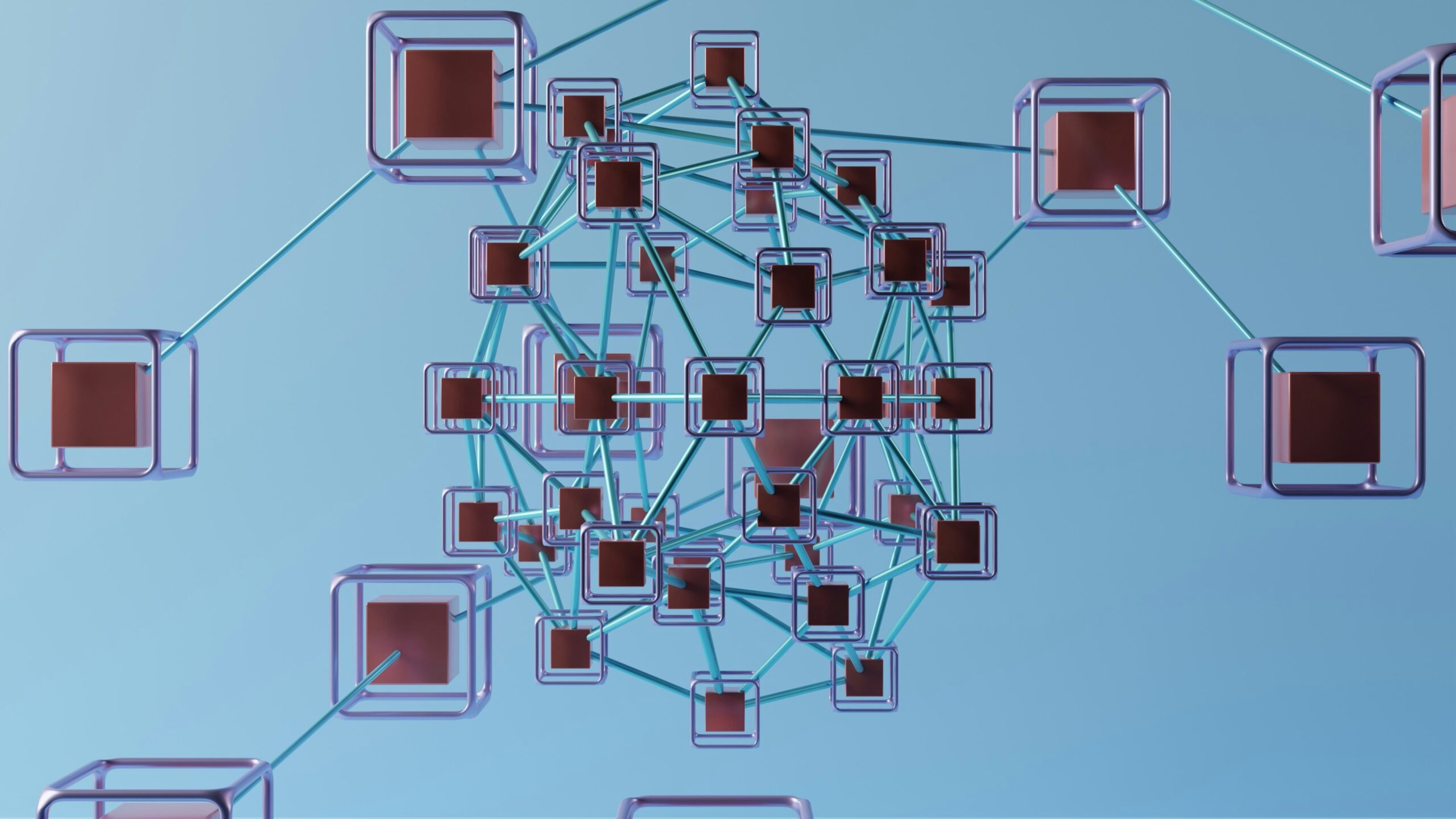

Introduction to Blockchain Technology

Blockchain technology offers a distinct approach to data management and transaction processing across many industries. At its core, a blockchain is a decentralized system that records information across multiple computers, so that the data is not controlled by a single entity. This distributed ledger technology offers transparency and security, two characteristics that support many applications beyond its original cryptocurrency context.

The fundamental architecture of blockchain consists of three core components: the distributed ledger, smart contracts, and cryptographic security.

The distributed ledger, or the blockchain itself, is a chain of blocks in which each block contains a list of transactions. New transactions are grouped into a block, and each new block is linked to the previous one, creating an append-only record that is very hard to alter after the fact. This chain is replicated across the nodes in the network, so that all participants have access to the same information, which enhances trust among users.

Smart contracts are another essential aspect of blockchain and decentralized systems. These are self-executing contracts with the terms of the agreement written directly into code, so that agreements execute automatically and transparently when predefined conditions are met. This automation reduces the need for intermediaries, which can lower costs and increase efficiency.

Lastly, the cryptographic security built into blockchain technology protects the integrity of the data. Each block is hashed and linked to the previous block using cryptographic hash functions, so changing any recorded data changes the hashes that follow it. This makes the ledger verifiable and resistant to tampering.

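
The hash linking described above can be illustrated with a minimal sketch. It is a toy model under stated assumptions, not how any particular blockchain is implemented: the function names `block_hash`, `add_block`, and `is_valid` are hypothetical, blocks are plain dictionaries, and there is no network, consensus, or digital signature. It only shows why editing an earlier block is detectable.

```python
import hashlib
import json


def block_hash(index, transactions, prev_hash):
    """Return the SHA-256 digest of a block's contents as a hex string."""
    payload = json.dumps(
        {"index": index, "transactions": transactions, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, transactions):
    """Append a block that commits to the hash of the previous block."""
    # The first block has no predecessor, so it points at an all-zero hash.
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    block = {
        "index": index,
        "transactions": transactions,
        "prev_hash": prev_hash,
        "hash": block_hash(index, transactions, prev_hash),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Recompute every hash and check each link to the previous block."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else "0" * 64
        if block["prev_hash"] != expected_prev:
            return False
        recomputed = block_hash(
            block["index"], block["transactions"], block["prev_hash"]
        )
        if block["hash"] != recomputed:
            return False
    return True


chain = []
add_block(chain, ["alice pays bob 5"])
add_block(chain, ["bob pays carol 2", "carol pays dave 1"])
print(is_valid(chain))  # True

# Tampering with an earlier block no longer matches its stored hash.
chain[0]["transactions"][0] = "alice pays bob 500"
print(is_valid(chain))  # False
```

In a real network, every node would hold its own copy of the chain and run a check of this kind independently, which is why a change made on one copy alone does not go unnoticed.
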
In summary, blockchain technology is a tool for building more open, secure, and efficient systems across many sectors, paving the way for a transparent future in data management and collaboration.

Understanding Decentralization

Decentralization refers to the distribution of authority, control, and decision-making away from a centralized entity or governing body. In contrast to traditional centralized systems, where control is vested in a single organization or individual, decentralized systems let multiple participants maintain a collective governance structure. This shift is particularly significant in the context of blockchain technology, which underpins numerous decentralized systems. The main advantages of decentralization are enhanced security, transparency, and resilience against failures.

One of the primary benefits of decentralized systems is enhanced security. In centralized systems, sensitive information is often stored in a single location, which makes that location an attractive target for attackers. In a decentralized framework, the data is replicated across a network of nodes, so compromising one node is not enough to corrupt the shared record, and it is considerably harder for malicious actors to compromise the entire network.

Transparency is another crucial advantage of decentralization. In a traditional centralized system, users must trust the controlling entity to manage data honestly and ethically. Decentralized systems, particularly those based on blockchain, instead provide a transparent, tamper-resistant ledger of transactions. Participants can independently verify each transaction, which supports accountability and fosters trust among users without the need for intermediaries.

Furthermore, decentralized systems mitigate the risk of single points of failure.
In centralized environments, the failure of a single critical component can bring down the entire system. Decentralization, on the other hand, allows the system to keep operating even if individual nodes experience downtime or outages. This resilience is vital for maintaining system reliability and user confidence in the technology.

The Role of Smart Contracts in Decentralized Systems

Smart contracts are self-executing contracts with the terms of the agreement written directly into code. Running on blockchain and decentralized systems, these digital contracts facilitate, verify, and enforce the negotiation or performance of a contract without the need for intermediaries. The automation they provide improves efficiency, reduces costs, and lowers the potential for disputes, which is why they are being adopted in sectors such as finance, supply chain management, and real estate.

In a decentralized environment, smart contracts execute automatically when predefined conditions are met, so all parties are held to the agreed-upon terms. Transactions can therefore complete more quickly, because the need for traditional enforcement mechanisms is significantly reduced. By removing intermediaries such as banks or legal institutions from routine steps, decentralized systems can increase transaction speed and reduce the costs associated with manual processing or disputes.

Smart contracts also enhance transparency and security. Because they execute on a blockchain, all transactions are recorded in a tamper-resistant ledger, making it very difficult to alter or delete contract details once they are established. This not only builds trust among participants but also provides a clear audit trail, which is crucial for compliance and accountability in many industries.

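
The idea of a contract that executes itself once predefined conditions are met can be sketched in plain Python. This is only a simulation under stated assumptions: the `EscrowContract` class, its methods, and the party names are hypothetical, nothing here runs on a blockchain, and the `log` list merely stands in for the ledger's audit trail. Real smart contracts are written in platform-specific languages and executed by the network's nodes.

```python
class EscrowContract:
    """Toy escrow: funds go to the seller once delivery is confirmed."""

    def __init__(self, buyer, seller, amount):
        self.buyer = buyer
        self.seller = seller
        self.amount = amount
        self.deposited = False
        self.delivered = False
        self.paid_out = False
        self.log = []  # append-only record of events (stands in for the ledger)

    def deposit(self, sender, amount):
        """Accept the agreed amount from the buyer, exactly once."""
        if sender != self.buyer or amount != self.amount:
            raise ValueError("only the buyer can deposit the exact agreed amount")
        if self.deposited:
            raise ValueError("funds have already been deposited")
        self.deposited = True
        self.log.append(("deposit", sender, amount))
        self._settle()

    def confirm_delivery(self, sender):
        """Record the buyer's confirmation that the goods arrived."""
        if sender != self.buyer:
            raise ValueError("only the buyer can confirm delivery")
        self.delivered = True
        self.log.append(("delivered", sender))
        self._settle()

    def _settle(self):
        # The "self-executing" step: pay out as soon as both conditions hold,
        # with no third party deciding when to release the funds.
        if self.deposited and self.delivered and not self.paid_out:
            self.paid_out = True
            self.log.append(("payout", self.seller, self.amount))


contract = EscrowContract(buyer="alice", seller="bob", amount=100)
contract.deposit("alice", 100)
print(contract.paid_out)  # False: delivery has not been confirmed yet
contract.confirm_delivery("alice")
print(contract.paid_out)  # True: both conditions are met
print(contract.log[-1])   # ('payout', 'bob', 100)
```

The payout is triggered by the contract's own rules rather than by an intermediary, and every state change is appended to the log, which mirrors the audit trail described above.
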
Moreover, their versatility allows smart contracts to be used in diverse applications, from automating payments in cryptocurrency transactions to managing complex supply chains. As decentralized systems continue to evolve, smart contracts will play a pivotal role in optimizing operations, reducing errors, and fostering cooperation among stakeholders. Their ability to streamline workflows matters more as industries increasingly adopt blockchain technology to improve operational efficiency.

Use Cases of Blockchain and Decentralization

Blockchain and decentralized systems are transforming various industries by enhancing transparency, security, and efficiency.

In finance, for instance, blockchain is changing payment systems by enabling near-real-time transactions with fewer intermediaries. Companies like Ripple use this technology to facilitate cross-border payments, with the aim of reducing transaction times and costs. Blockchain-based solutions are also being applied to remittances, allowing individuals to send money between countries quickly and securely.

In the supply chain sector, blockchain provides a transparent, tamper-resistant ledger for tracking products from origin to the end consumer. This is particularly beneficial in industries like food and pharmaceuticals, where traceability is crucial. Walmart, working with IBM's Food Trust network, uses blockchain to track food products, which supports safety and compliance and enables swift recalls when necessary. Such systems not only protect consumers but can also improve inventory management and reduce waste.

The healthcare industry is another area where blockchain is making significant strides. By ensuring that patient records are securely stored
