Mastering Python NumPy Indexing & Slicing: A Comprehensive Guide

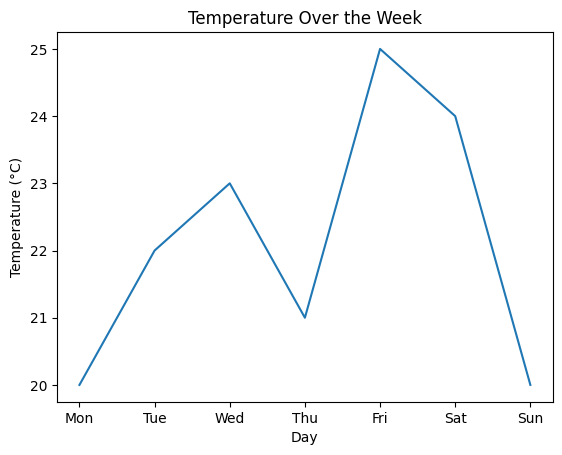

Today, we’re diving into a fundamental aspect of using NumPy effectively: indexing and slicing. Whether you’re analyzing data or processing images, understanding how to manipulate arrays efficiently is key, and NumPy offers powerful tools to help you do just that. In this guide, we’ll explore the theory behind indexing and slicing, and then we’ll roll up our sleeves for some hands-on examples. Let’s jump right in!

Understanding Indexing and Slicing

Before we get into the details, let’s clarify what we mean by indexing and slicing. Indexing means accessing individual elements of an array by their position. Slicing means extracting a portion of an array, such as a range of elements, rows, or columns. Understanding these concepts is crucial for working efficiently with arrays, enabling you to manipulate data quickly and effectively.

Why Indexing and Slicing Matter

Indexing and slicing in NumPy are more flexible and powerful than their counterparts for Python lists. They support multi-dimensional access, boolean masks, and arrays of indices, so complex data extraction takes very little code. This is particularly useful in data analysis, where you often need to work with specific parts of your data.

The Basics of Indexing

Let’s start with the basics of indexing, that is, how you access elements in a NumPy array.

One-Dimensional Arrays

For a 1D array, indexing is straightforward: pass a single integer in square brackets. Indexing starts at 0, so the first element of an array arr is accessed as arr[0].

Multi-Dimensional Arrays

For multi-dimensional arrays, indexing uses a tuple of indices, one per dimension. For example, matrix[0, 0] accesses the element in the first row and first column.

Negative Indexing

NumPy supports negative indexing, which counts from the end of the array: arr[-1] is the last element, arr[-2] the second to last, and so on. Negative indexing is a convenient way to access elements relative to the end of an array.

Advanced Indexing Techniques

NumPy also provides advanced indexing capabilities, allowing for more complex data extraction.

Boolean Indexing

You can use boolean arrays to filter elements. For an array arr, the expression arr > 25 creates a boolean array indicating where the condition is true. If that result is stored as bool_idx, then arr[bool_idx] extracts the elements where the condition holds.
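As a minimal sketch of the indexing forms covered so far (1D, multi-dimensional, negative, and boolean), the snippet below uses made-up sample values. The contents of arr and matrix are assumptions chosen for illustration; only the names arr, matrix, and bool_idx come from the text.

```python
import numpy as np

# Illustrative data; these values are assumptions for this sketch.
arr = np.array([10, 20, 30, 40, 50])
matrix = np.array([[1, 2, 3],
                   [4, 5, 6],
                   [7, 8, 9]])

# One-dimensional indexing starts at 0.
first = arr[0]                 # 10

# Multi-dimensional indexing takes one index per dimension.
top_left = matrix[0, 0]        # 1 (first row, first column)

# Negative indices count from the end of each axis.
last = arr[-1]                 # 50
bottom_right = matrix[-1, -1]  # 9

# Boolean indexing: the comparison yields a boolean array,
# which then selects the elements where it is True.
bool_idx = arr > 25            # [False, False, True, True, True]
filtered = arr[bool_idx]       # [30, 40, 50]

print(first, top_left, last, bottom_right)
print(bool_idx, filtered)
```

Swapping in a different threshold for 25 is a quick way to see how the boolean mask changes the selection.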
Fancy Indexing

Fancy indexing uses arrays (or lists) of integer indices to access elements, for example arr[[0, 2, 4]]. This allows you to select multiple elements from an array at once, in any order you choose.

The Art of Slicing

Slicing enables you to extract portions of an array efficiently. The syntax for slicing is start:stop:step.

One-Dimensional Slicing

In a 1D array, a slice such as 1:4 gives a start index of 1 (inclusive) and a stop index of 4 (exclusive), so arr[1:4] extracts the elements at indices 1 to 3.

Multi-Dimensional Slicing

For multi-dimensional arrays, slicing can be applied along each dimension. A slice such as matrix[0:2, 1:3] extracts the first two rows and the second and third columns.

Step in Slicing

You can also specify a step value to skip elements. For example, 0:5:2 covers indices 0 to 4 and takes every second element, that is, indices 0, 2, and 4.

Omitting Indices

Omitting an index slices to the beginning or end of the array: arr[:3] takes everything before index 3, and arr[2:] takes everything from index 2 onward. This is a convenient shorthand for common slicing operations.

Practical Applications of Indexing and Slicing

Let’s apply what we’ve learned to a practical scenario. Consider a dataset representing temperatures over a week in different cities, stored for instance as a 2D array with one row per city and one column per day. Indexing a row returns one city’s week, slicing the columns returns a range of days for every city, and a boolean mask picks out the readings above a threshold. A handful of short expressions is enough to access and filter the temperature data, which shows how powerful these tools can be in data manipulation.

Conclusion

Mastering NumPy indexing and slicing is essential for anyone working with data in Python. By leveraging these techniques, you can extract, manipulate, and analyze your data with ease, unlocking the full potential of NumPy’s array capabilities.

Next time you work with NumPy arrays, experiment with different indexing and slicing techniques to see how they can streamline your code and enhance your data analysis workflow. I hope this tutorial helps you gain a deeper understanding of NumPy indexing and slicing. Feel free to reach out with any questions or if you need further examples!
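If you want a starting point for that experimentation, here is a minimal sketch of fancy indexing, the slicing forms described above, and the weekly temperature scenario. All array values, and the layout of temps (one row per city, one column per day), are assumptions made up for illustration rather than real data.

```python
import numpy as np

# Illustrative data; these values are assumptions for this sketch.
arr = np.array([10, 20, 30, 40, 50])
matrix = np.array([[1, 2, 3],
                   [4, 5, 6],
                   [7, 8, 9]])

# Fancy indexing: select several positions at once with a list of indices.
picked = arr[[0, 2, 4]]      # [10, 30, 50]

# Slicing uses start:stop:step, with stop excluded.
middle = arr[1:4]            # [20, 30, 40]
block = matrix[0:2, 1:3]     # [[2, 3], [5, 6]]
stepped = arr[0:5:2]         # [10, 30, 50]
head = arr[:3]               # [10, 20, 30] (start omitted)
tail = arr[2:]               # [30, 40, 50] (stop omitted)

# Hypothetical weekly temperatures: rows are cities, columns are Mon..Sun.
temps = np.array([
    [22, 24, 19, 23, 25, 27, 26],   # hypothetical city A
    [30, 31, 29, 33, 34, 32, 35],   # hypothetical city B
    [15, 14, 16, 18, 17, 13, 12],   # hypothetical city C
])

city_b_week = temps[1]            # all seven days for city B
weekend = temps[:, 5:]            # last two days for every city
hot_readings = temps[temps > 30]  # readings above 30, as a flat 1D array

print(picked, middle, stepped, head, tail)
print(block)
print(city_b_week, weekend, hot_readings, sep="\n")
```

Changing the slice bounds or the threshold in temps > 30 and re-running is a quick way to check your intuition about which elements each expression selects.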
