Shaping Careers Through Industry-Focused IT Training

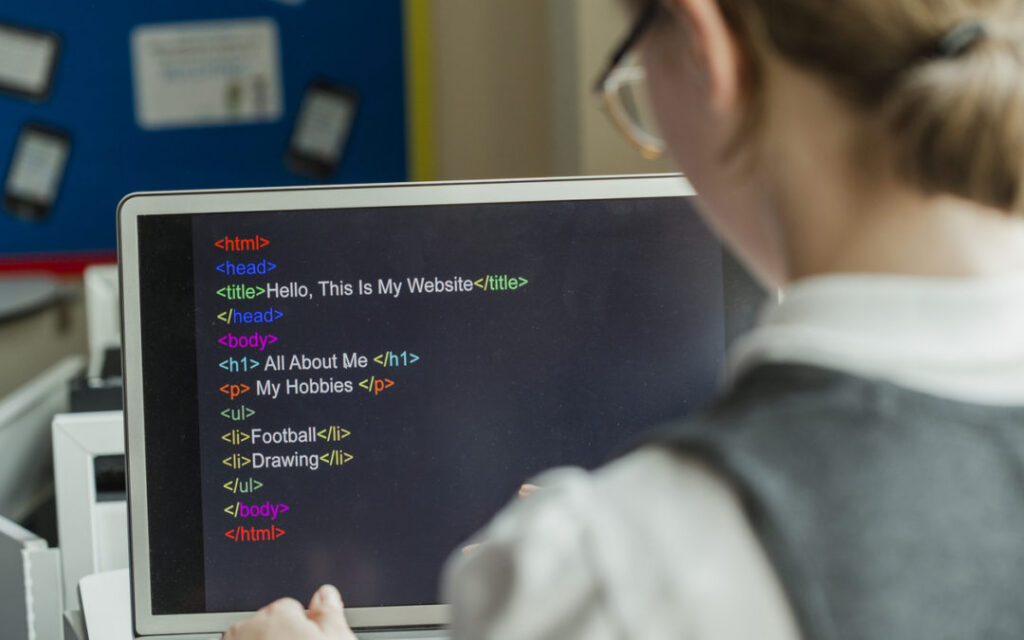

The information technology industry is evolving at an unprecedented pace. New technologies, tools, and job roles are emerging every year, creating exciting opportunities for skilled professionals. However, many students and graduates still struggle to enter the IT field due to a lack of practical knowledge and industry exposure. This is where industry-focused IT training plays a vital role in shaping successful and sustainable careers.

The Growing Skill Gap in the IT Industry

Despite having academic degrees, many candidates are not considered job-ready by employers. The primary reason is the gap between traditional education and real-world industry requirements. Companies today look for professionals who can work with modern tools, understand live systems, and contribute to projects from the very beginning.

Industry-focused IT training is designed to bridge this gap. It aligns learning with current market demands, ensuring that students gain skills that are directly applicable in professional environments.

What Is Industry-Focused IT Training?

Industry-focused IT training goes beyond textbooks and theoretical concepts. It emphasizes practical learning, real-world applications, and exposure to current technologies used by companies. Such training programs are structured around job roles, industry workflows, and employer expectations.

Key elements of industry-focused training include:

This approach ensures that learners are prepared for real challenges in the IT workplace.

Practical Learning as the Foundation of Career Growth

Practical experience is the backbone of industry-focused training. When students work on real projects, they gain a deeper understanding of how concepts are applied in actual scenarios. This helps them develop problem-solving skills, logical thinking, and technical confidence.

For example, a data analytics learner becomes more competent by analyzing real datasets, creating dashboards, and generating insights. A software development student gains confidence by building applications, debugging code, and deploying projects. Such experiences play a crucial role in shaping a strong professional profile.

Learning Technologies That the Industry Demands

The IT job market constantly demands new and relevant skills. Industry-focused training programs concentrate on technologies that are in high demand, such as Python, Java, full-stack development, data analytics, cloud computing, artificial intelligence, and machine learning.

By learning these technologies in a structured and practical way, students stay ahead of the curve. They become adaptable professionals who can evolve with changing industry trends and take on new roles with confidence.

Career Opportunities for Freshers and Career Switchers

One of the biggest advantages of industry-focused IT training is that it supports learners from diverse backgrounds. Fresh graduates, non-IT students, and working professionals looking to switch careers can all benefit from this approach.

With step-by-step learning paths, beginner-friendly modules, and continuous practice, learners can build strong technical foundations regardless of their academic background. Industry-focused training empowers individuals to start, restart, or accelerate their IT careers.

The Role of Mentorship and Career Guidance

Mentorship is a critical component of shaping IT careers. Trainers with industry experience provide valuable insights into workplace expectations, project handling, and career growth strategies. They help learners understand how to approach interviews, solve technical problems, and perform effectively in professional environments.

Career guidance, resume building, mock interviews, and placement support further enhance a learner’s employability. This holistic approach ensures that students are not only skilled but also confident and job-ready.
From Training to Employment Success

Industry-focused IT training is designed with a clear goal: employment success. By combining practical skills, industry knowledge, and career support, it prepares learners to meet employer expectations. They develop the ability to adapt, learn continuously, and contribute meaningfully to organizations.

This training model transforms learners into professionals who are ready to take on real responsibilities and grow within the IT industry.

Conclusion

In today’s competitive job market, success in IT depends on relevance, skills, and practical exposure. Industry-focused IT training plays a crucial role in shaping careers by aligning education with real-world demands. Through hands-on learning, up-to-date technologies, expert mentorship, and career support, learners can build strong foundations for long-term success.

By choosing the right training approach, aspiring professionals can turn their potential into performance and shape rewarding careers in the ever-growing IT industry.
