Top Reasons to Enroll in ADCA+ for Tech Enthusiasts

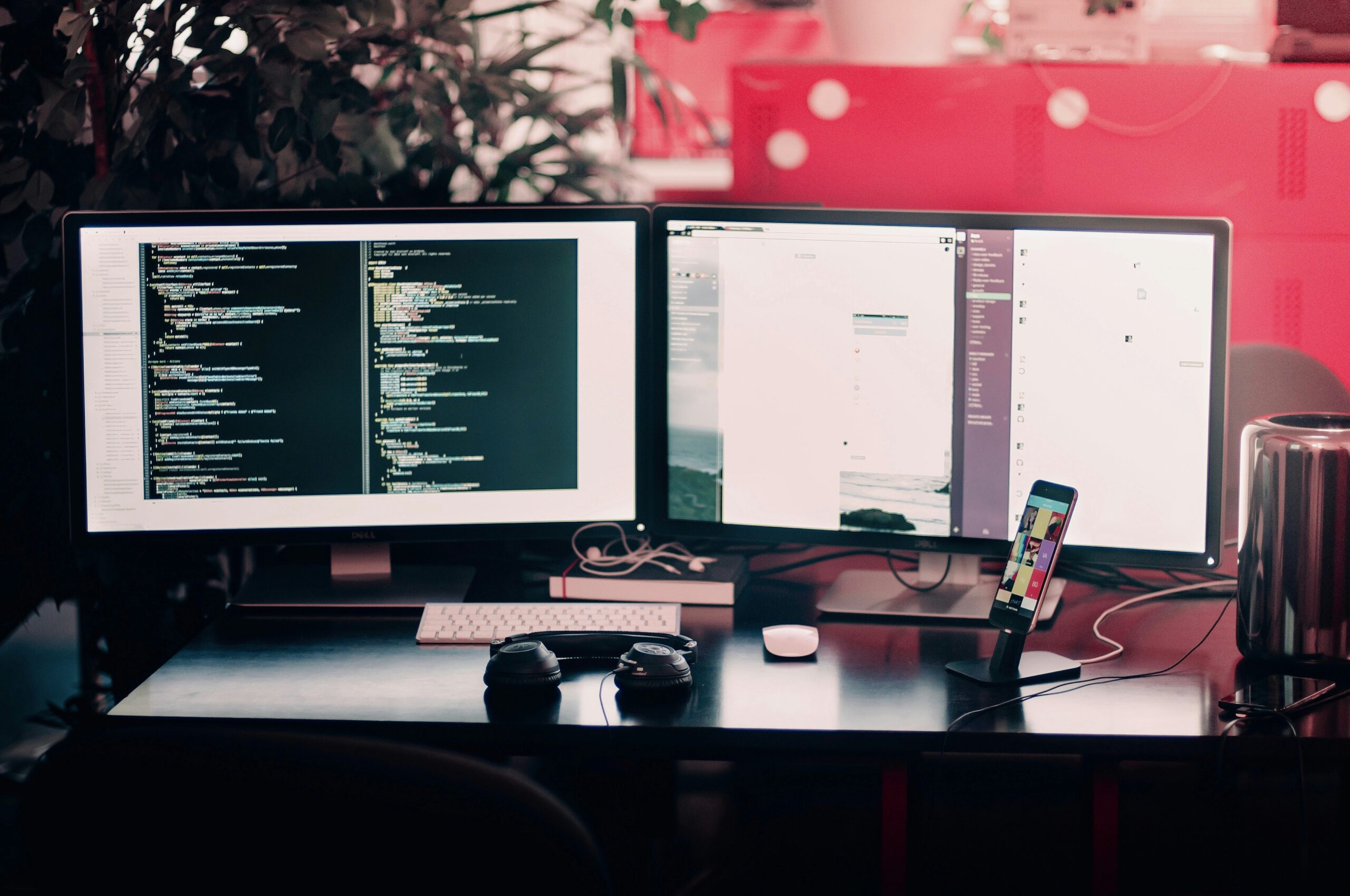

Introduction to ADCA

The Advanced Diploma in Computer Applications (ADCA) is a comprehensive program designed to equip learners with essential computer skills. As technology permeates nearly every aspect of modern life, demand for proficient computer users has grown across sectors such as business, education, and healthcare. The ADCA program responds to this demand with a structured curriculum aimed at building proficiency in core computer skills.

The program’s primary objective is to impart substantive knowledge of computer fundamentals, software applications, and system operation. By mastering these components, students build a solid foundation for developing their computer skills further. The formal qualification not only helps individuals become adept at using computers but can also give them an edge in a job market where digital literacy is increasingly important.

As organizations embrace digital transformation, the need for professionals who can work confidently with technology keeps growing. The ADCA program offers an in-depth introduction to software applications, programming languages, and web technologies, all of which matter in this evolving landscape. In doing so, it prepares candidates to meet the expectations of employers looking for people who can contribute to technology-driven initiatives.

Pursuing an ADCA can also benefit learners beyond the acquisition of computer skills. It encourages critical thinking and problem-solving, helping participants approach challenges analytically. Because the program integrates practical training, learners can apply their knowledge in real-world scenarios, which improves their readiness for future career opportunities.
This holistic approach is why the ADCA remains a valuable choice for those looking to build their expertise and succeed in the digital age.

Benefits of Completing an ADCA

Completing an Advanced Diploma in Computer Applications (ADCA) offers several advantages for both personal and professional development. One of the most notable is improved job prospects. Employers increasingly seek candidates with a solid foundation in computer skills, and by completing the ADCA curriculum, individuals demonstrate proficiency in areas such as software applications, programming, and database management, which are required in many roles across industries.

The program also strengthens technical skills, allowing learners to tackle complex problems with confidence. This makes them more competitive in the job market and helps them perform daily tasks more efficiently. Practical skills in document preparation, spreadsheet management, and online communication tools build a broad understanding of technology that benefits almost any workplace.

Beyond immediate employment advantages, completing the ADCA can increase one’s confidence with technology. Many people feel apprehensive about using advanced software or applications. Through the rigorous training and hands-on practice offered in the ADCA course, learners can overcome this apprehension and feel competent when using technology for personal and professional purposes.

Finally, the ADCA can open doors to higher education and specialized training. Graduates may choose to pursue further study, such as bachelor’s or master’s degrees in related fields, strengthening their academic credentials.
This path consolidates their existing computer skills and lets them explore areas of interest in greater depth, which can lead to further career advancement.

In summary, the ADCA program contributes to improved job prospects, technical proficiency, greater confidence, and opportunities for further education, enabling individuals to thrive in today’s technology-driven landscape.

Key Topics Covered in the ADCA Curriculum

The ADCA curriculum spans a wide range of subjects designed to give students the knowledge and skills to advance their careers. One of the foundational modules is Computer Fundamentals, in which learners gain a solid understanding of computer components, operating systems, and basic software applications. This foundation is essential for anyone looking to build their computer skills.

Building on the basics, the Programming Languages module introduces widely used languages such as C, C++, and Java. It emphasizes not only syntax but also the problem-solving approaches students need to develop applications. Programming remains a core skill in the industry, so proficiency in these languages opens up many opportunities in the tech field.

Database Management is another critical area of the ADCA course. This module covers database design, database management systems (DBMS), SQL (the standard language for querying relational databases), and data manipulation techniques. Knowing how to design and manage databases efficiently is indispensable for aspiring IT professionals and prepares them to handle large volumes of data.

Web Technologies, including HTML, CSS, and JavaScript, are also integral to the ADCA program. This module focuses on creating and managing web content and on the principles of web development.
Knowledge gained in this area allows students to build interactive websites and adds to their overall digital literacy.

Finally, the Software Applications module explores commonly used software tools, giving students practical skills for everyday business scenarios. Topics may include word processing, spreadsheets, and presentation design, all of which support productivity in a professional environment.

Together, these modules reflect a comprehensive approach to computer education that helps students complete the ADCA and build strong computer skills.

Who Should Pursue an ADCA?

The Advanced Diploma in Computer Applications is designed for a wide range of people looking to improve their computer skills. First, recent graduates, especially those with a background in commerce or science, can benefit significantly from the program. As technology advances rapidly, employers look for candidates with practical skills as well as theoretical knowledge. By pursuing the ADCA, recent graduates can improve their employability and stay relevant in a competitive job market.

Working professionals are another key audience for the ADCA program. For people already in the workforce, continual professional development is essential to keep pace with industry changes. The ADCA gives these professionals an opportunity to upskill and acquire new competencies in software applications and computer technologies.
